Calvin Wankhede / Android Authority

If you are a frequent ChatGPT user, you may have noticed that the AI chatbot occasionally tends to go down or stop working at the most inconvenient times. These outages usually don’t last that long, but after the last one left me stranded, I started to long for a more reliable alternative. Fortunately, it turns out that a simple solution exists in the form of local language models like LLaMA 3. The best part? They can even run on relatively simple hardware, like a MacBook Air! Here’s everything I learned from using LLaMA 3 and how it compares to ChatGPT.

Why you should care about local AI chatbots

Most of us have only used ChatGPT and well-known alternatives like Microsoft’s Copilot and Google’s Gemini. All of these chatbots run on powerful servers in remote data centers, and using the cloud simply means relying on someone else’s computer, which can go down or stop working for hours.

It’s also unclear how cloud-based AI chatbots handle your data and privacy. We know that ChatGPT stores conversations to train future models, and the same is probably true for every other Big Tech company as well. It’s no surprise that companies around the world, ranging from Samsung to Wells Fargo, have restricted their employees from using ChatGPT internally.

Online AI chatbots are neither reliable nor private.

This is where locally run AI chatbots come into the picture. Take LLaMA 3, for example: an open-source language model developed by Meta’s AI division (yes, the same company that owns Facebook and WhatsApp). The key distinction here is LLaMA’s open-source status, which means anyone can download it and run it themselves. And since no data ever leaves your computer, you don’t have to worry about secrets leaking.

The only requirement for running LLaMA 3 is a relatively modern computer. This unfortunately rules out smartphones and tablets. However, I have found that you can run the smaller version of LLaMA 3 on shockingly cheap hardware, including many laptops released in recent years.

LLaMA 3 vs ChatGPT: How does offline AI fare?

In the next section I’ll discuss how to install LLaMA 3 on your computer, but first you might want to know how it stacks up against ChatGPT. The answer is not simple because ChatGPT and LLaMA 3 are both available in different variants.

Until last month, the free version of ChatGPT was limited to the older GPT-3.5 model and you had to pay $20 per month to use GPT-4. However, with the release of GPT-4o, OpenAI is now giving free users access to the latest model, with some limitations on the number of messages you can send per hour.

LLaMA 3 is also available in two model sizes: 8 billion and 70 billion parameters. The 8B version is the only choice for those with limited computing resources, which essentially means everyone but the most die-hard PC gamers. You see, the larger 70B model requires at least 24 GB of video memory (VRAM), which is currently only available on exotic $1,600 GPUs like Nvidia’s RTX 4090. Even then, you’ll have to settle for a compressed version, as the full 70B model requires 48 GB VRAM.

Considering all this, LLaMA 3 8B is naturally our model of choice. The good news is that it holds up very well against GPT-3.5, ChatGPT’s baseline model. Here are a few comparisons between the two:

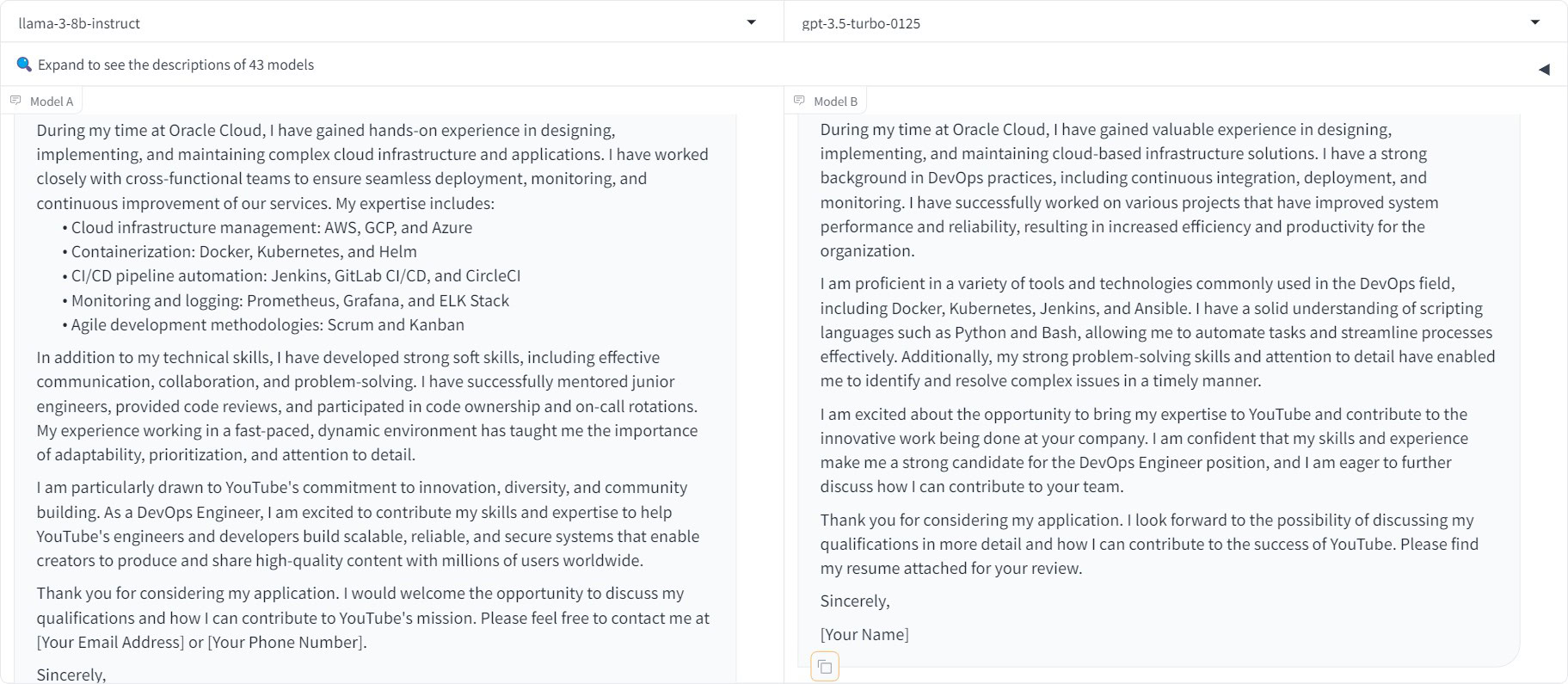

- Question 1: Write a cover letter for the position of DevOps Engineer at YouTube. I have been working at Oracle Cloud since graduating as a software engineer in 2019.

Result: Pretty much a tie, though I slightly prefer LLaMA’s bulleted-list approach.

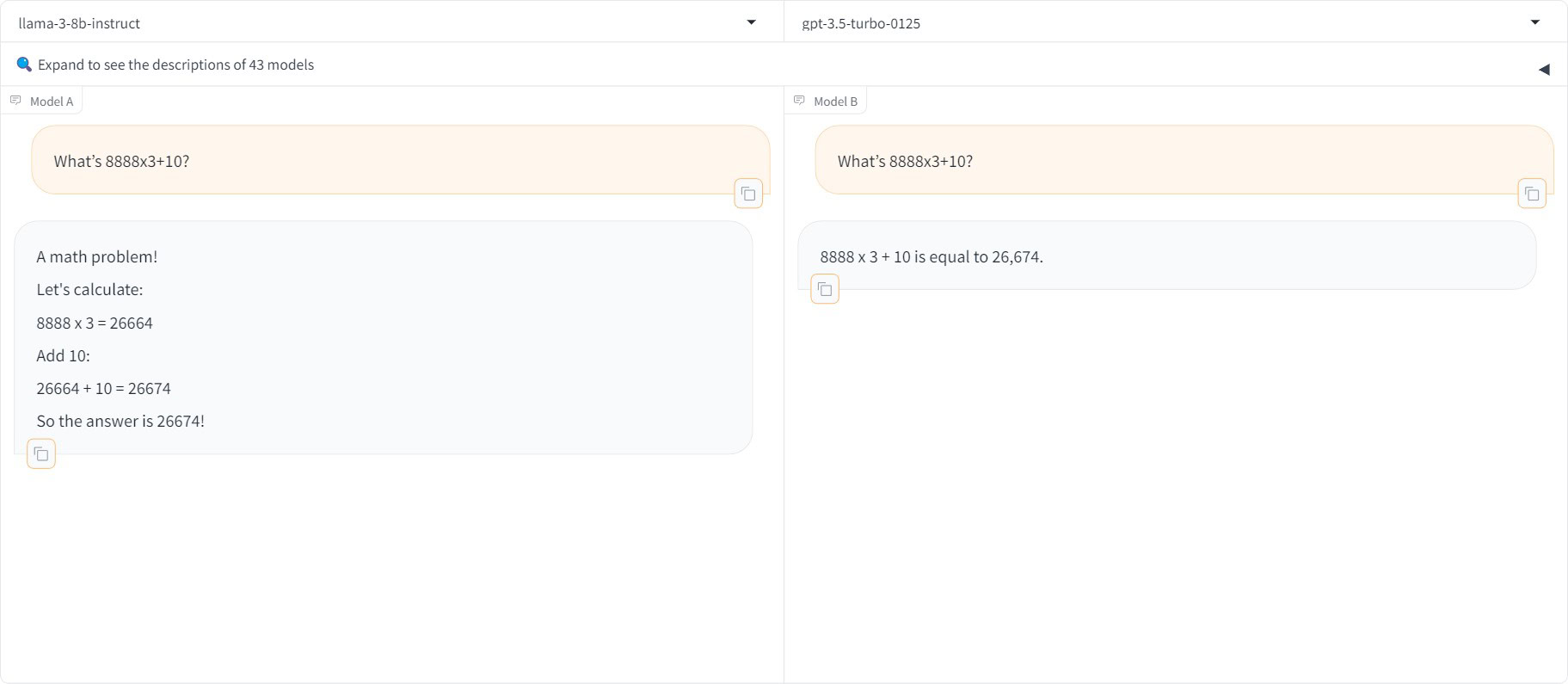

- Question 2: What is 8888×3+10?

Result: Both chatbots provided the correct answer.

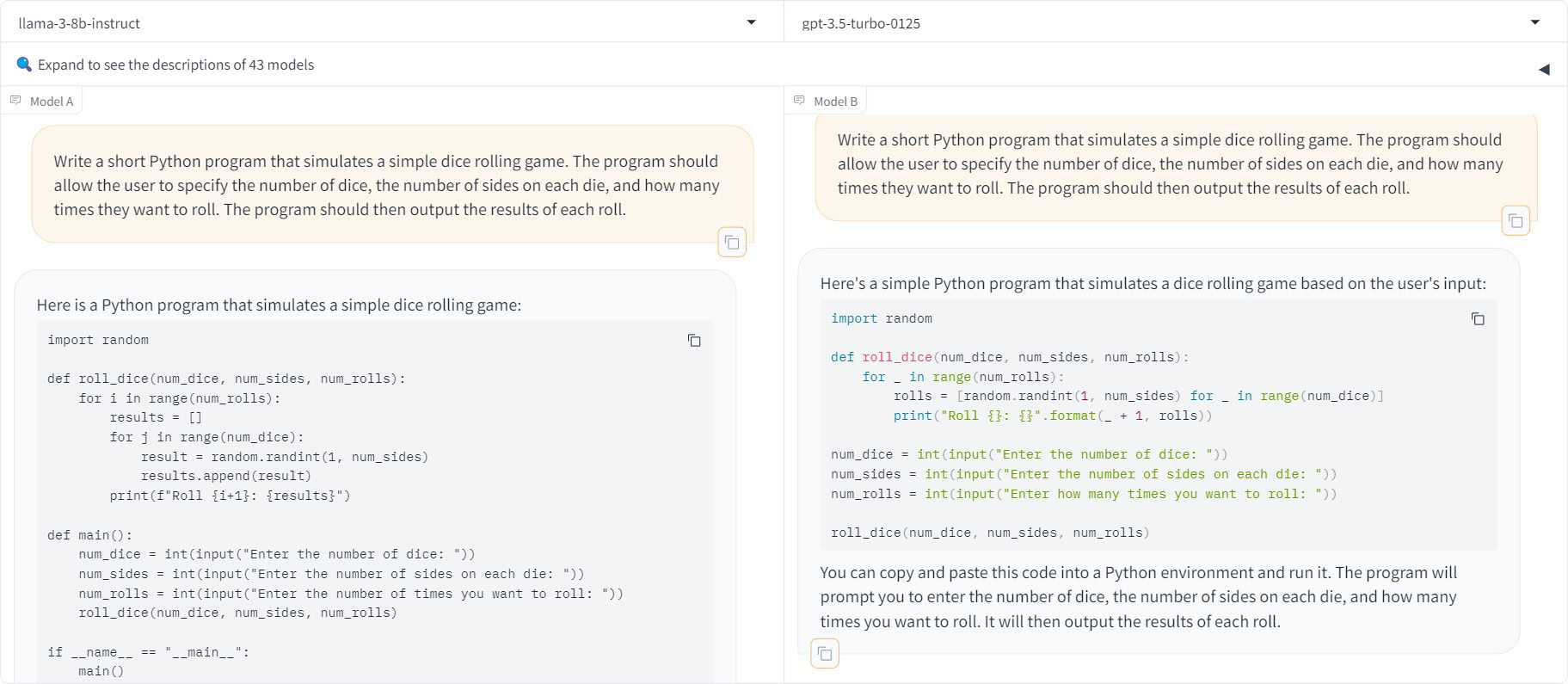

- Question 3: Write a short Python program that simulates a simple dice game. The program should allow the user to specify the number of dice, the number of sides on each die, and the number of times they want to roll. The program should then display the results of each roll.

Result: Both chatbots produced working code.
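
For reference, a program that satisfies this prompt can be quite short. The following is a minimal illustrative sketch, not the output of either chatbot; the function names `roll_dice` and `format_rolls` are hypothetical, and the values are passed as arguments rather than read interactively.

```python
import random


def roll_dice(num_dice, num_sides, num_rolls, rng=None):
    """Return a list of rolls; each roll is a list of num_dice values in 1..num_sides."""
    if min(num_dice, num_sides, num_rolls) < 1:
        raise ValueError("all three values must be at least 1")
    rng = rng or random.Random()
    return [
        [rng.randint(1, num_sides) for _ in range(num_dice)]
        for _ in range(num_rolls)
    ]


def format_rolls(rolls):
    """Render one line per roll, showing each die and the total."""
    return [
        f"Roll {i}: {dice} (total {sum(dice)})"
        for i, dice in enumerate(rolls, start=1)
    ]


# Example: three rolls of two six-sided dice (results are random)
for line in format_rolls(roll_dice(num_dice=2, num_sides=6, num_rolls=3)):
    print(line)
```

An interactive version would collect the three numbers with `input()` and convert them with `int()` before calling `roll_dice`.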

One caveat worth mentioning is that neither GPT-3.5 nor LLaMA 3 can access the Internet to retrieve recent information. For example, asking both models about the Pixel 8’s SoC yielded confident-sounding but completely inaccurate answers. If you ever ask factual questions, I would take the local model’s answers with a grain of salt. But for creative and even programming tasks, LLaMA 3 performs quite admirably.

How to download and run LLaMA 3 locally

As I mentioned above, LLaMA 3 is available in two sizes. The LLaMA 3 8B model requires nothing more than a semi-recent computer. In fact, running it on my desktop yielded faster responses than ChatGPT or any online chatbot available today. Although my computer has a mid-range gaming GPU, LLaMA 3 will also happily run on a laptop with modest hardware. Case in point: I still got fairly fast responses when I ran it on an M1 MacBook Air with 16GB of RAM. That’s four-year-old hardware, older than ChatGPT itself!

With that background out of the way, you’ll need some software to actually interact with LLaMA 3. That’s because, while you can download the model for free, Meta doesn’t offer it as a program or app that you can simply double-click to run. Fortunately, thanks to the open-source community, we now have several LLM frontends available.

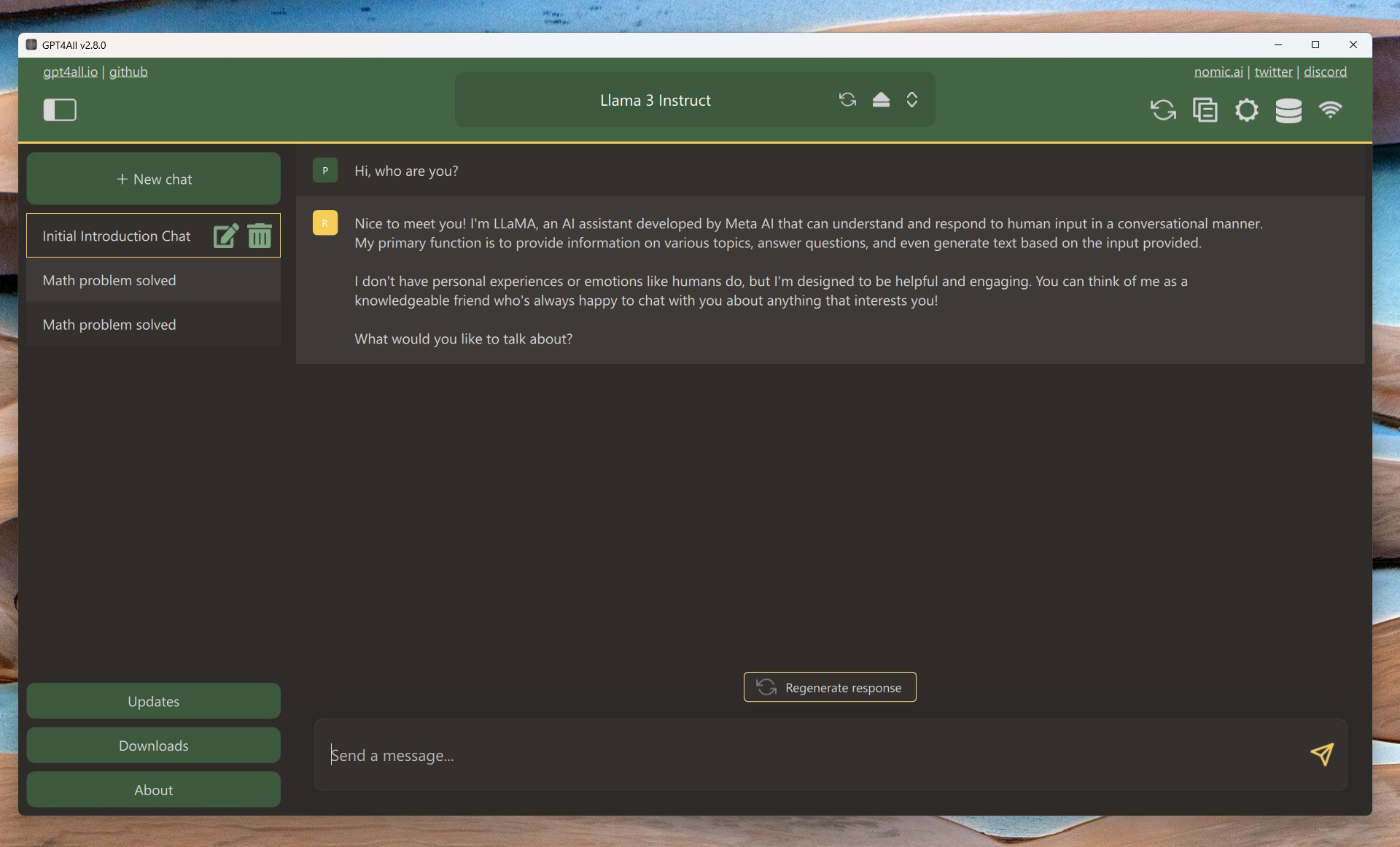

After trying a handful of them, I would recommend GPT4All as it makes downloading and running LLaMA 3 as painless as possible. Here’s a quick guide:

- Download GPT4All for your Windows or macOS computer and install it.

- Open the GPT4All app and click Download models.

- Search for the model “LLaMA 3 Instruct” and click Download. This is the 8B model that is tuned for conversations. Downloading may take some time depending on your internet connection.

- Once the download is complete, close the browser pop-up and select LLaMA 3 Instruct from the Model drop-down menu.

- That’s it: you’re ready to start chatting. You should see the screen pictured above. Simply type a prompt, press Enter, and wait for the model to generate its response.
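
If you would rather script the model than use the chat window, GPT4All also publishes Python bindings. The sketch below assumes you have run `pip install gpt4all`; the `ask` helper is hypothetical, and the model file name in the usage comment is an assumption that should be checked against the model list in the app.

```python
def ask(model, prompt, max_tokens=200):
    """Send one prompt inside a chat session and return the reply text."""
    # chat_session() keeps the prompt in a conversational context
    # for the duration of the with-block.
    with model.chat_session():
        return model.generate(prompt, max_tokens=max_tokens)


# Usage (not run here: the first call downloads a multi-gigabyte model file):
#
#   from gpt4all import GPT4All
#   model = GPT4All("Meta-Llama-3-8B-Instruct.Q4_0.gguf")  # assumed file name
#   print(ask(model, "Summarize the benefits of running a chatbot locally."))
```

As with the desktop app, everything here runs on your own machine once the model file has been downloaded.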

My admittedly powerful desktop can generate 50 tokens per second, which easily surpasses ChatGPT’s responsiveness. Apple Silicon-based computers offer the best price-performance ratio, thanks to their unified memory, and generate tokens faster than a human can read.

If you’re using Windows without a dedicated GPU, text generation in LLaMA 3 will be a lot slower. Running it on my desktop’s CPU alone produced just 5 tokens per second and required at least 16 GB of system memory. Then again, cloud-based chatbots also slow to a crawl during periods of high demand. Plus, at least I can rest easy knowing that my chats will never be read by anyone else.
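
To put those throughput figures in perspective, here is a quick back-of-the-envelope calculation. The 300-token reply length is an assumption chosen purely for illustration, and `seconds_to_generate` is a hypothetical helper; the 50 and 5 tokens-per-second rates are the ones measured above.

```python
def seconds_to_generate(num_tokens, tokens_per_second):
    """Time needed to produce num_tokens at a steady generation rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


REPLY_TOKENS = 300  # assumed reply length, for illustration only

for label, rate in [("desktop GPU", 50), ("desktop CPU", 5)]:
    seconds = seconds_to_generate(REPLY_TOKENS, rate)
    print(f"{label}: {seconds:.0f} s for {REPLY_TOKENS} tokens")
```

Under that assumption, the GPU finishes a reply in about 6 seconds, while the CPU takes about a minute.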